
Gain visibility and centralize operations with an Apache Kafka® GUI

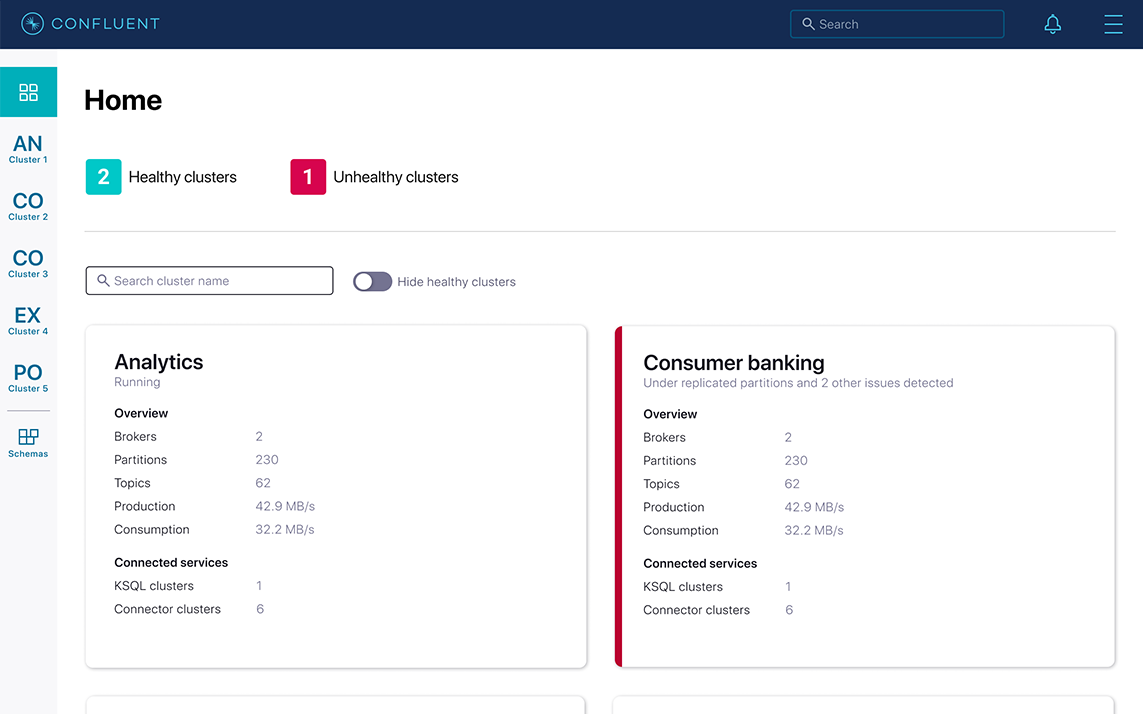

Confluent Platform offers intuitive GUIs for managing and monitoring Apache Kafka®. These tools allow developers and operators to centrally manage and control key components of the platform, maintain and optimize cluster health, and use intelligent alerts to reduce downtime by identifying potential issues before they occur.

If you prefer a cloud-based monitoring and alerting solution, Health+ helps you maintain system health and high availability. Read the announcement blog.

Features

Control Center

Control Center is a self-hosted GUI with expert-designed dashboards to enable centralized management and monitoring of key components of the platform, including clusters, brokers, schemas, topics, messages, connectors, ksqlDB queries, security, replication and more.

Health+

Health+ is a Confluent-hosted, web-based GUI that offers intelligent alerting and monitoring tools to reduce the risk of downtime, streamline troubleshooting, surface key metrics, and accelerate issue resolution. It helps offload monitoring costs related to storing historical data and is built on Confluent’s deep understanding of data in motion infrastructure.

Accelerate application development and integration

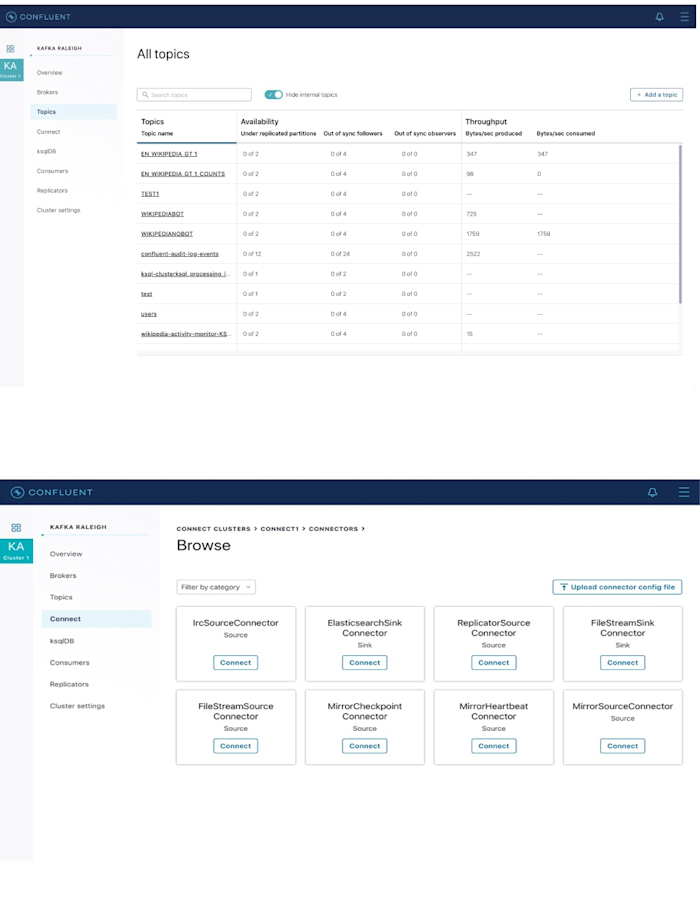

Management of messages, topics and schemas

Observe and control messages, topics, and Schema Registry, all in a centralized way with a single Kafka UI:

- Messages: browse messages, and search by offset or timestamp within a partition

- Topics: create, edit, delete, and view all topics in one place

- Schema Registry: create, edit and view topic schemas, and compare schema versions

Management of Kafka connectors

Centrally manage all your connectors that are built on the Kafka Connect framework in one place:

- View a summary of all Kafka Connect clusters

- Search by name and ID

- Add, edit and delete connectors

- Support multiple Connect clusters at a time
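
For readers who want to see what these connector operations look like underneath the GUI, here is a minimal sketch of the Kafka Connect REST API calls for listing, creating, and deleting connectors. It only builds the HTTP requests and does not send them. The worker address `http://localhost:8083`, the connector name, and the file and topic values are assumptions for illustration. This is not a description of how Control Center itself is implemented.

```python
import json
from urllib.parse import quote
from urllib.request import Request

# Assumption: a Kafka Connect worker exposing its REST API on the default port.
CONNECT_URL = "http://localhost:8083"


def list_connectors_request(base_url: str = CONNECT_URL) -> Request:
    """GET /connectors returns the names of all connectors on the cluster."""
    return Request(f"{base_url}/connectors", method="GET")


def create_connector_request(name: str, config: dict, base_url: str = CONNECT_URL) -> Request:
    """POST /connectors creates a connector from a name and a config map."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return Request(
        f"{base_url}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def delete_connector_request(name: str, base_url: str = CONNECT_URL) -> Request:
    """DELETE /connectors/{name} removes a connector and stops its tasks."""
    return Request(f"{base_url}/connectors/{quote(name, safe='')}", method="DELETE")


# Hypothetical connector: the name, file path, and topic are placeholders.
req = create_connector_request(
    "file-source-demo",
    {
        "connector.class": "FileStreamSource",
        "tasks.max": "1",
        "file": "/tmp/demo.txt",
        "topic": "demo-topic",
    },
)
print(req.get_method(), req.full_url)
```

Passing any of these request objects to `urllib.request.urlopen` would send it to a running Connect worker. With several Connect clusters, the same calls apply with a different `base_url` per cluster.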

Management of ksqlDB

Integrate with ksqlDB so that you and your developers can:

- View a summary of all ksqlDB clusters

- Develop and run queries

- Support multiple ksqlDB clusters at a time

Centralize Kafka management operations

Scalable security management

Simplify security management for the entire organization through integration with role-based access control (RBAC). This allows you to view and manage access permissions for yourself and others.

Dynamic broker configuration

Simplify at-scale operations using a centralized dashboard to view broker configurations and modify them dynamically without resorting to rolling restarts.

Centralized cluster management

Centralize operations not only for multiple Kafka clusters, but also for multiple Connect, ksqlDB, and Schema Registry clusters.

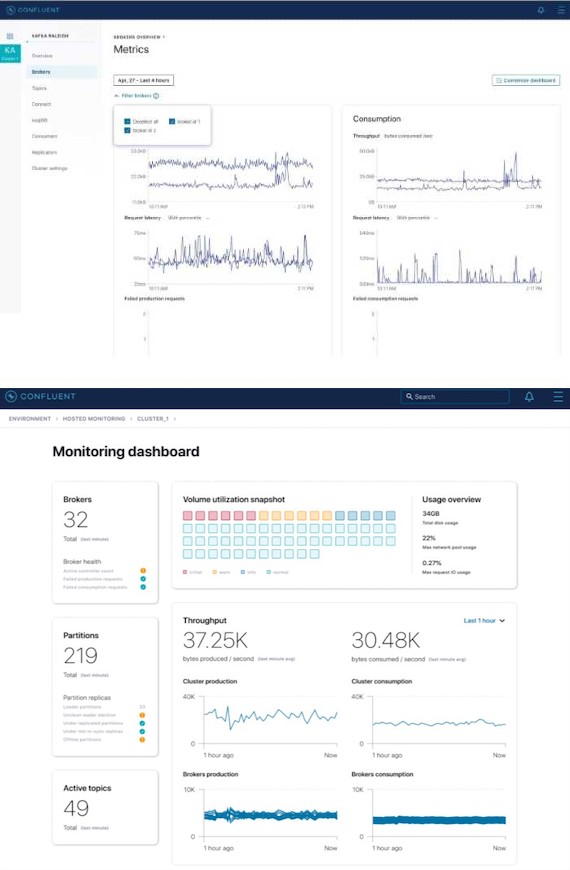

Track key metrics and monitor system health

Assured message delivery

Track the key performance indicators for Apache Kafka. Use intuitive charts to track and receive alerts for:

- Production and consumption metrics

- Throughput

- Request latency

- Failed requests

- Consumer lag
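
Of the metrics above, consumer lag is the easiest to illustrate with arithmetic: for each partition, it is the log end offset minus the consumer group's committed offset. The sketch below works on plain dictionaries. The function name, the sample offsets, and the choice to count a partition with no committed offset as fully unread are all assumptions for illustration, not how Control Center computes its charts.

```python
from typing import Dict


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption: a partition with no committed offset counts as fully unread.
        position = committed.get(partition, 0)
        # Clamp at zero so a stale end-offset reading never yields negative lag.
        lag[partition] = max(end - position, 0)
    return lag


# Hypothetical offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 980, 2: 2000}
committed = {0: 1450, 1: 980}

lag = consumer_lag(end_offsets, committed)
print(lag)                # {0: 50, 1: 0, 2: 2000}
print(sum(lag.values()))  # 2050
```

An alert on consumer lag typically fires when the total, or the lag of a single partition, stays above a chosen threshold for some period.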

Real-time and historical visualizations

View all your critical health metrics over time through a self-hosted or scalable, cloud-based solution. Gain visibility into:

- Broker and ZooKeeper uptime

- Under-replicated partitions

- Out-of-sync replicas

- Disk usage and distribution
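
Two of these metrics follow directly from partition metadata. A partition is under-replicated when its in-sync replica set (ISR) is smaller than its assigned replica set, and the out-of-sync replicas are the assigned brokers missing from the ISR. The sketch below shows that logic on hand-written data. The `PartitionState` type and the sample values are hypothetical, and fetching real metadata from a cluster is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PartitionState:
    topic: str
    partition: int
    replicas: List[int]  # broker IDs assigned to hold a copy of the partition
    isr: List[int]       # broker IDs currently in sync with the leader


def out_of_sync(p: PartitionState) -> List[int]:
    """Broker IDs that are assigned replicas but absent from the ISR."""
    return sorted(set(p.replicas) - set(p.isr))


def under_replicated(partitions: List[PartitionState]) -> List[PartitionState]:
    """Partitions whose ISR is smaller than their assigned replica set."""
    return [p for p in partitions if len(p.isr) < len(p.replicas)]


# Hypothetical metadata: broker 2 has fallen behind on partition 1.
partitions = [
    PartitionState("orders", 0, replicas=[1, 2, 3], isr=[1, 2, 3]),
    PartitionState("orders", 1, replicas=[1, 2, 3], isr=[1, 3]),
]

for p in under_replicated(partitions):
    print(f"{p.topic}-{p.partition}: out-of-sync replicas {out_of_sync(p)}")
# prints: orders-1: out-of-sync replicas [2]
```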

Monitoring of multi-site deployments

Monitor replication tasks directly from the GUI through integration with Multi-Region Clusters and Replicator. This allows you to track key metrics such as throughput and lag.

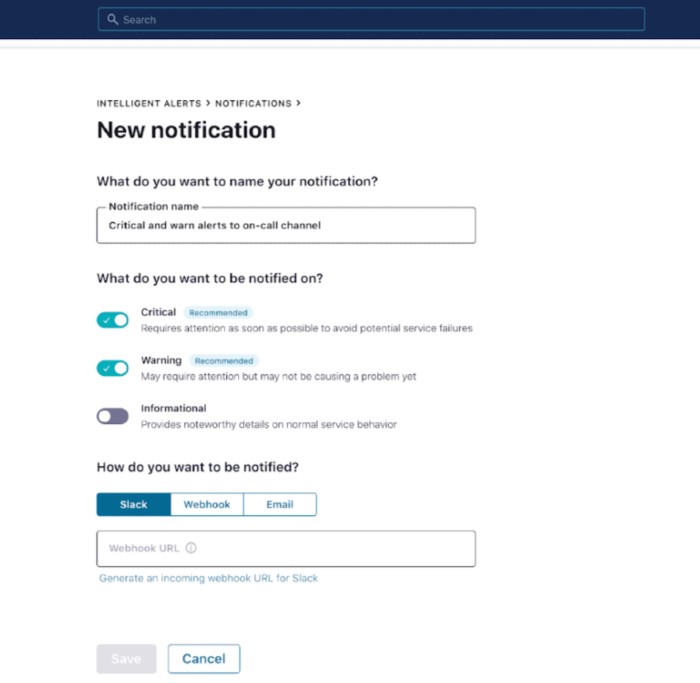

Simplify Kafka troubleshooting

Identification of problems before they occur

Keep your clusters running smoothly and ensure high availability with intelligent alerts based on expert-tested rules and algorithms.

Confluent-backed recommendations

Optimize your deployments based on best practices from the inventors of Apache Kafka, tuned from managing over 5,000 clusters in Confluent Cloud.

Streamlined support experience

Resolve issues faster by giving Confluent experts secure access to historical metadata, with no manual data entry required.