May Preview Release: Advancing KSQL and Schema Registry

We are very excited to announce the Confluent Platform May 2018 Preview release! The May Preview introduces powerful new capabilities for KSQL and the Schema Registry UI. Read on to learn more, and remember to share your feedback and help shape Confluent software! You can do that by visiting the Confluent Community Slack channel (particularly the #ksql and #control-center channels) or by contributing to the KSQL project on GitHub, where you can file issues, submit pull requests, and contribute to discussions.

Confluent Control Center

Schema Registry

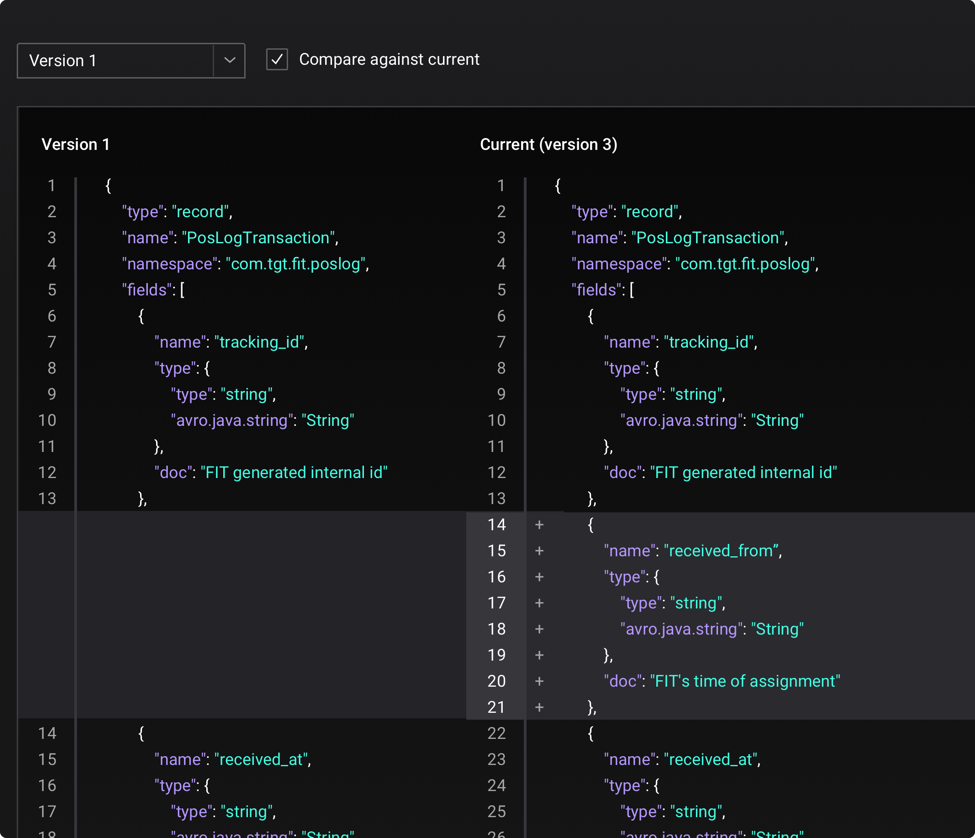

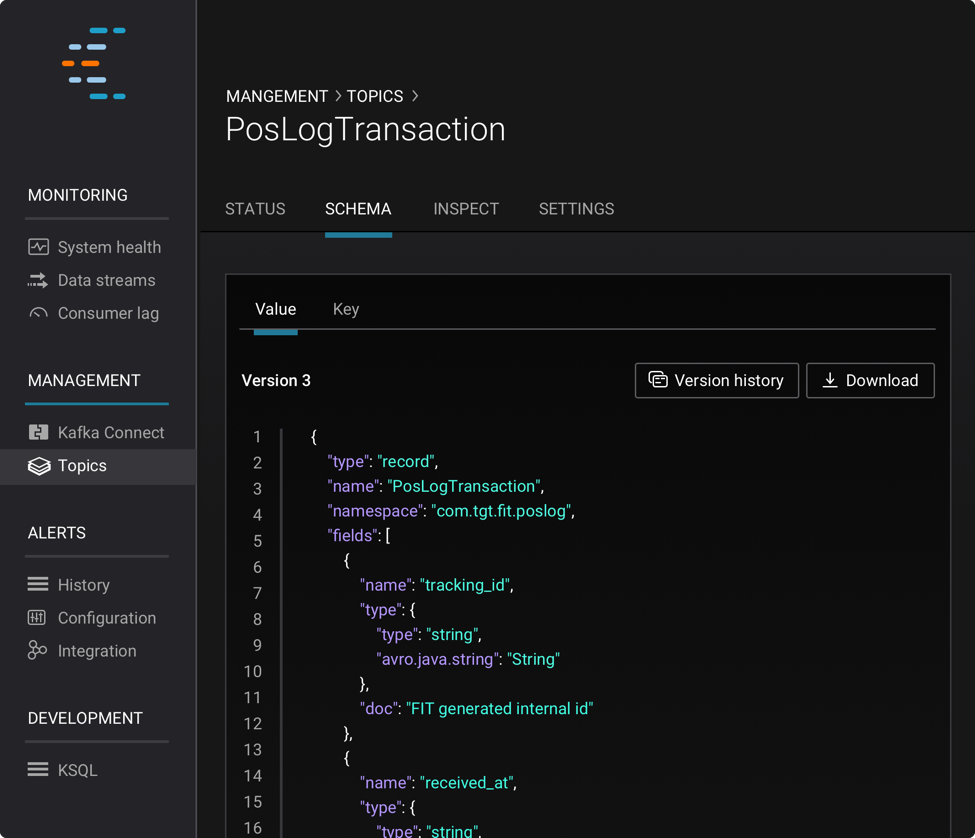

Schema Registry management has been one of the features our customers request most often. In this preview release, we’re introducing the new Schema Registry UI, which lets users see the schema for each topic along with its version history, and easily compare a previous schema with the current one.

The new UI is designed to help operations teams manage schemas and to help beginners learn about Schema Registry.

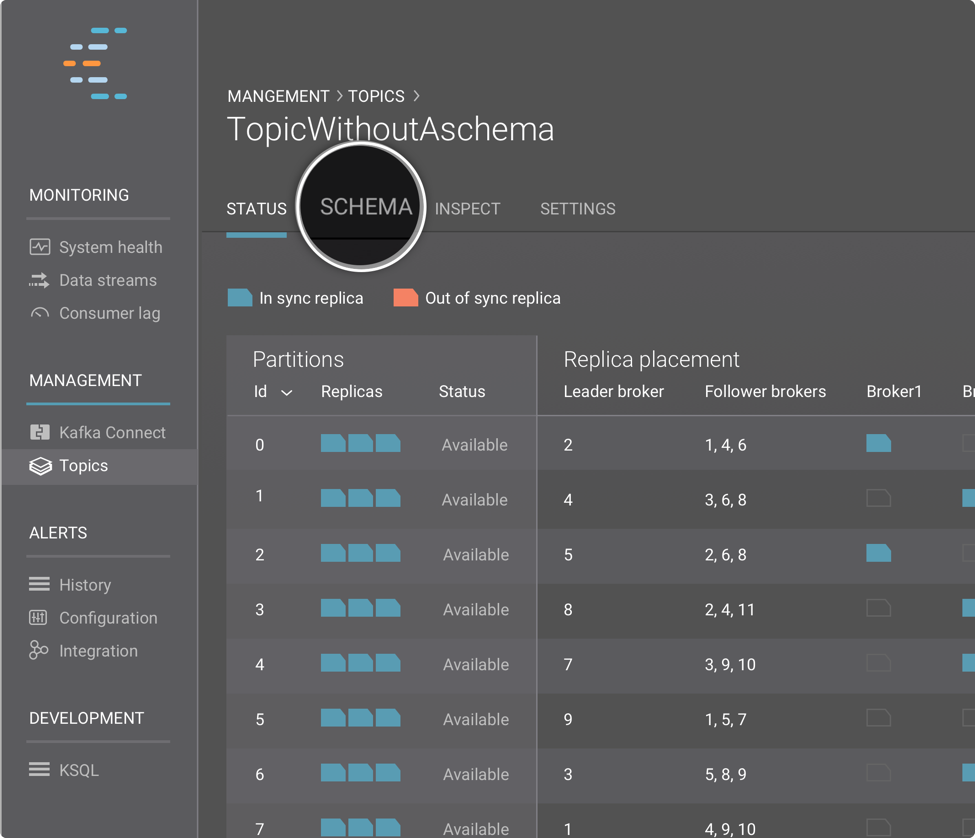

To access a topic’s schema, navigate to the new SCHEMA tab on the topic details page, or click ‘•••’ and select “Schema”.

Notice the “Value” and “Key” tabs, which show the schemas for the value and the key of the messages in the topic. You can view all versions of the schema by clicking the “Version History” button and compare an earlier version against the current one. You can also download the schema by clicking the “Download” button at the top right.

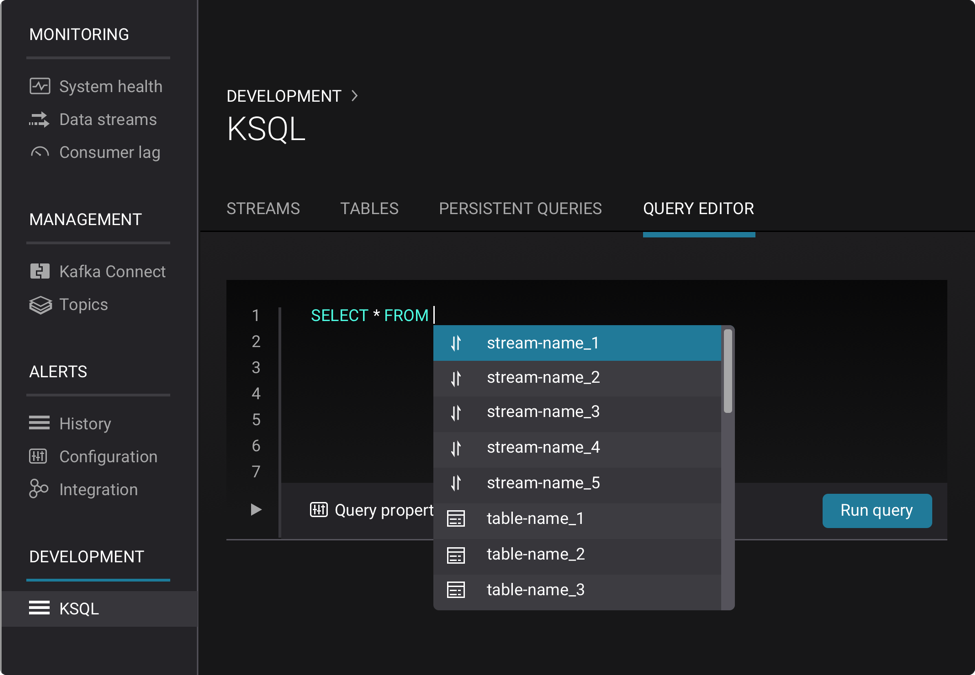

KSQL Editor Supports Autocompletion

We’ve added autocomplete to the KSQL query editor to help you compose queries faster. You no longer need to work out which streams and tables are available or recall specific KSQL syntax; autocomplete helps you as you type.

KSQL

INSERT INTO

INSERT INTO is a new statement that lets you write query output into an existing stream. INSERT INTO is currently not supported for tables. You can use INSERT INTO to merge output from multiple queries into a single output stream.

For example, suppose you are a retailer with separate streams for online and in-store sales. You want to compute your daily total sales for different items. You can use INSERT INTO to populate a stream for all sales and aggregate that stream:
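A minimal sketch of this pattern follows. The stream names (`online_sales`, `store_sales`, `all_sales`), the table name, and the columns `item_id` and `amount` are hypothetical, and the two source streams are assumed to already exist with identical schemas:

```sql
-- Assumption: online_sales and store_sales are existing streams with the
-- same columns, including item_id and amount (hypothetical names).

-- Create the combined stream, seeded from the online sales stream.
CREATE STREAM all_sales AS
  SELECT * FROM online_sales;

-- Write the in-store sales into the same, already existing stream.
INSERT INTO all_sales
  SELECT * FROM store_sales;

-- Aggregate the merged stream into per-item totals over one-day windows.
CREATE TABLE daily_sales_per_item AS
  SELECT item_id, SUM(amount) AS total_sales
  FROM all_sales
  WINDOW TUMBLING (SIZE 24 HOURS)
  GROUP BY item_id;
```

Because both queries write to `all_sales`, the aggregation treats online and in-store sales as a single stream.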

CP Docker Images for KSQL

Confluent Platform Docker images are now available for the preview versions of both the KSQL server and KSQL CLI. You can use the confluentinc/cp-ksql-server image to deploy KSQL servers in interactive (default) or headless mode. You can use the confluentinc/cp-ksql-cli image to start a KSQL CLI session inside a Docker container.

Documentation for these images can be found at docs.confluent.io.

Going forward, we’ll continue to release these Docker images for each preview release as well as for each Confluent Platform stable release.

Topic and Schema Cleanup

The DROP statement for streams and tables now supports an option for also deleting the underlying Kafka topic and, for streams and tables in AVRO format, the registered Avro schema. To have DROP clean up topics and schemas, you need to add DELETE TOPIC to your DROP statement:
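For instance, assuming a hypothetical stream named `all_sales` (the name is illustrative only), the statement might look like this:

```sql
-- Drops the stream and also deletes its underlying Kafka topic
-- (and its registered Avro schema, if the stream uses AVRO format).
DROP STREAM all_sales DELETE TOPIC;
```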

This ensures that you don’t leave topics and schemas behind as you create and drop streams and tables, which is particularly helpful during iterative development and testing. If you do want to keep your topics and schemas, say for consumption by some other system, simply omit the DELETE TOPIC option from your statement:
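Assuming a hypothetical stream named `all_sales` (an illustrative name), dropping it while keeping the topic and schema would look like this:

```sql
-- Removes only the KSQL stream definition; the Kafka topic and any
-- registered schema are left in place.
DROP STREAM all_sales;
```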

Where to go from here

Try out the new Confluent Platform May 2018 Preview release and share your feedback! Here’s what you can do to get started:

- Download the Confluent Platform May 2018 Preview release.

- Learn how to use the new features by reading the Confluent Platform May 2018 Preview documentation.

- Visit the Confluent Community Slack channel to ask questions or share feedback. To discuss KSQL, visit the #ksql channel. For Control Center, visit the #control-center channel.

- If you are interested in contributing to KSQL, visit the KSQL project on GitHub. Feel free to file issues, submit pull requests, and contribute to discussions.
